Categories

Open Source, Prompt Engineering, Large Language Models, Model Management, LLM, AI Applications, AI Workflow, Backend Service, Agent Framework, RAG Pipeline, LLMOps, Monitoring and Analysis, RAG, Prompt Orchestration, Agent Capabilities, AI Platform

Dify

Welcome to Dify, the open-source superhero of AI application development, where your dreams of building with large language models (LLMs) come true without the need for a cape or a PhD in rocket science! With Dify, you can orchestrate prompts visually—because who has time for cryptic code when you can drag and drop like a pro? It’s like having a personal assistant that not only manages your models but also keeps an eye on them, ensuring they don’t start plotting world domination on their own. Plus, with our RAG pipeline and agent capabilities, you can be the proud parent of your very own AI army, ready to tackle any task you throw their way. And let’s not forget about LLMOps, which sounds fancy but really just means you can monitor and analyze your models without breaking a sweat. So why pay an arm and a leg to the big cloud hosts when you can Dify your way to success for half the price? Join us and turn your AI aspirations into reality, one hilarious mishap at a time!

Benefits

- Accelerate AI Development
  - Use a visual canvas and intuitive tools to build and test complex AI workflows without writing extensive code.

- Broad LLM Compatibility
  - Integrate with hundreds of LLMs, both proprietary models from popular providers and open-source ones, such as GPT, Mistral, and Llama 3.

- End-to-End RAG Support
  - Handle the full RAG pipeline, from document ingestion to retrieval, with built-in document processing tools.

- Scalable Agent Capabilities
  - Define agents using LLM Function Calling or ReAct, and extend them with 50+ pre-built tools such as DALL·E and WolframAlpha.

- Insightful Monitoring with LLMOps
  - Analyze logs and app performance over time to improve prompts, datasets, and models in production.

- Flexible API Access
  - Integrate Dify into existing systems through its Backend-as-a-Service APIs.
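To make the "document ingestion to retrieval" flow above a little more concrete, here is a minimal, generic sketch of the stages a RAG pipeline goes through: chunking, indexing, retrieval, and prompt assembly. It is not Dify's implementation. The function names, the toy bag-of-words "embedding", and the sample documents are all assumptions made for illustration; a real pipeline would use a proper embedding model and a vector store.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Ingestion step: split a document into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Retrieval step: rank chunks by similarity to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Generation step input: put the retrieved context in front of the question."""
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Hypothetical sample documents, for illustration only.
docs = [
    "Invoices are due within 30 days of the billing date.",
    "Support is available by email on weekdays.",
]
chunks = [c for d in docs for c in chunk_text(d)]
top = retrieve("When are invoices due?", chunks)
prompt = build_prompt("When are invoices due?", top)
```

The resulting `prompt` is what would be sent to an LLM. A platform with built-in RAG support takes care of these stages so you do not have to wire them together yourself.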

Features

- Visual Workflow Builder
  - Design and test powerful AI workflows on an intuitive visual canvas.

- Model Integration
  - Connect with a wide range of proprietary and open-source LLMs from various inference providers or self-hosted setups.

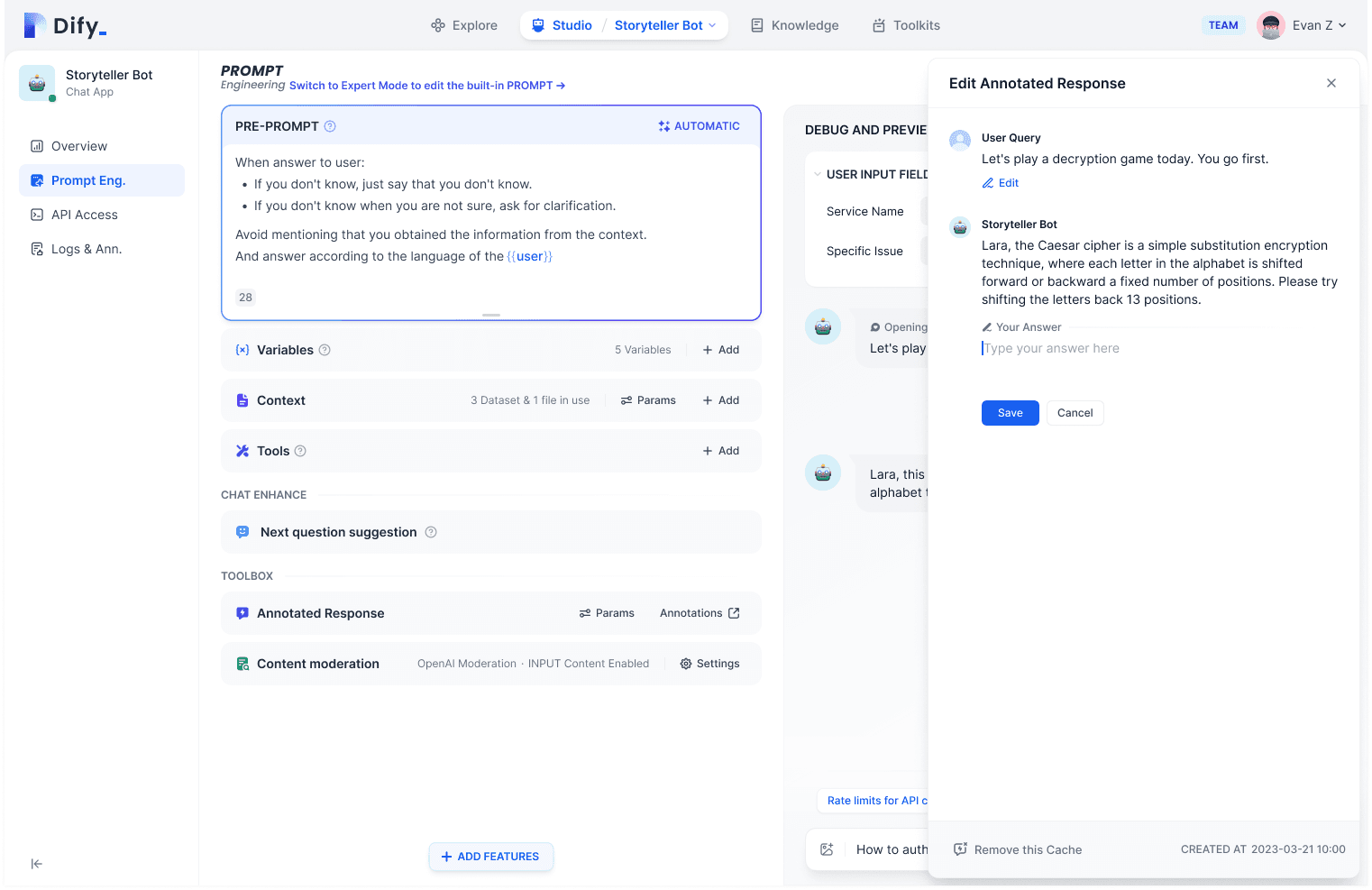

- Prompt IDE
  - Craft and debug prompts in a dedicated interface that lets you compare model performance and add features such as text-to-speech to an app.

- RAG Pipeline
  - Built-in support for end-to-end retrieval-augmented generation, including ingestion and processing of PDF and PPT documents.

- Agent System
  - Build flexible agents using ReAct or function calling, supported by 50+ built-in tools and the ability to define custom ones.

- LLMOps Dashboard
  - Access application logs, track performance metrics, and use production data to continuously improve prompts, datasets, and models.

- Backend-as-a-Service APIs
  - Every major Dify feature is accessible via API for easy integration into custom applications or business logic.
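As a rough idea of what calling an app through the Backend-as-a-Service APIs might look like, the sketch below builds (but does not send) an HTTP request to a chat app. The base URL, the `/chat-messages` path, the payload field names, and the `app-your-key-here` placeholder are assumptions for illustration; check them against the API reference shown for your own app before relying on them.

```python
import json
import urllib.request

# Assumed values for illustration; confirm against your app's API reference.
BASE_URL = "https://api.dify.ai/v1"
API_KEY = "app-your-key-here"  # placeholder, not a real key


def build_chat_request(query: str, user_id: str) -> urllib.request.Request:
    """Build a POST request asking a chat app to answer `query`."""
    payload = {
        "inputs": {},                 # app-defined variables, empty here
        "query": query,               # the end user's message
        "response_mode": "blocking",  # assumed: wait for the full answer
        "user": user_id,              # stable identifier for the end user
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat-messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("Summarize our refund policy.", user_id="demo-user")

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Building the request separately from sending it keeps the example runnable offline and makes the payload easy to inspect or log from your own business logic.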